Regulating AI Systems: the EU AI Act

In our previous articles, we explained trustworthy artificial intelligence (AI) and AI audit. In the next few articles, we will cover the legal frameworks aimed at promoting trustworthy AI. We will start with the EU AI Act, which was unveiled more than a year ago.

Because it is a regulation rather than a directive, the EU AI Act will have binding legal force in every Member State and will enter into force on the same date in all of them. It is not binding law yet, and the exact date on which it will enter into force has not been determined, but it is estimated that it will be implemented by the end of 2024 or the beginning of 2025.

Definition of AI in the EU AI Act

Article 3 defines 44 terms, including the term ‘artificial intelligence system’. An AI system is defined in a way that covers a broad range of applications:

“…software that is developed with [specific] techniques and approaches [listed in Annex 1] and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.” [1].

Annex 1 provides a list of current techniques and approaches:

“a. Machine learning approaches, including supervised, unsupervised and reinforcement learning, using a wide variety of methods including deep learning;

b. Logic- and knowledge-based approaches, including knowledge representation, inductive (logic) programming, knowledge bases, inference and deductive engines, (symbolic) reasoning and expert systems;

c. Statistical approaches, Bayesian estimation, search and optimization methods.” [1].

Hence, software-based systems that use machine learning, logic- and knowledge-based, or statistical approaches are considered AI systems under the AI Act (AIA). AIA also aims to cover techniques and approaches that are likely to be developed in the future, so Article 4 provides that the list in Annex 1 can be updated through delegated acts [1].

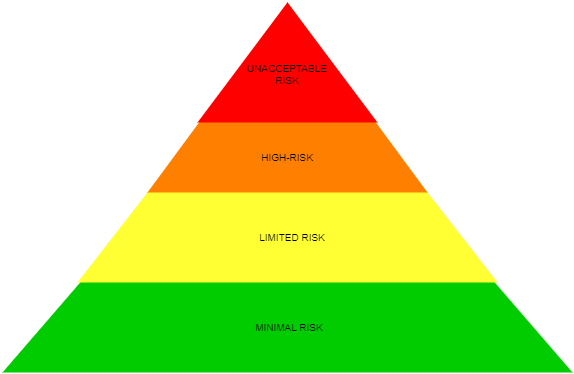

Risk-based Approach

AIA adopts a risk-based approach and divides AI systems into four categories: unacceptable risk, high-risk, limited risk and low or minimal risk systems.

- Unacceptable Risk: AI systems that fall in this category are considered a threat to fundamental rights and safety because they create unacceptable risks. AIA provides a list of such AI systems and prohibits using them or placing them on the market in the EU. Prohibited AI systems are:

  - Manipulative systems using subliminal techniques

  - AI systems exploiting specific vulnerable groups

  - Social scoring systems

  - Real-time remote biometric identification systems (with certain limited exceptions) [1].

- High-risk: AI systems that fall under this category adversely affect fundamental rights and safety. Article 6 classifies high-risk AI systems into two categories:

  - AI systems used as safety components of products or as products covered by the list of Union harmonisation legislation provided in Annex 2 [1]. For instance, safety components of machinery and medical devices fall under this category.

  - AI systems deployed in the areas listed in Annex 3:

    - Biometric identification and categorisation of natural persons,

    - Management and operation of critical infrastructure,

    - Education and vocational training,

    - Employment, workers management and access to self-employment,

    - Access to and enjoyment of essential private services and public services and benefits,

    - Law enforcement,

    - Migration, asylum and border control management,

    - Administration of justice and democratic processes [1].

Also, Article 7 points out that this list can be updated through delegated acts [1].

AIA introduces obligations for providers, users, operators, importers, distributors and any other third parties of high-risk AI systems. Providers indicate that an AI system conforms to AIA by affixing a CE marking to it, which Article 16 makes an obligation of providers [1].

- Limited Risk: AI systems that fall under this category are required to meet limited transparency rules. Article 52 lists four types of limited risk AI systems:

  - AI systems interacting with people,

  - Emotion recognition systems,

  - Biometric categorisation systems,

  - AI systems generating or manipulating image, audio or video content [1].

These limited risk AI systems are subject to the following transparency obligations respectively:

- Notifying the people that they are in interaction with an AI system,

- Informing the people about the operation of emotion recognition systems,

- Informing the people about the operation of biometric categorisation systems,

- Disclosing the artificial nature of content [1].

However, limited risk AI systems are not subject to these obligations if the systems are permitted by law to detect, prevent and investigate criminal offences [1]. This exception does not cover emotion recognition systems.

- Low or Minimal Risk: AI systems that do not fall under the previous categories are low or minimal risk AI systems. AIA does not impose new legal obligations on their development or use. However, Article 69 encourages the creation of voluntary codes of conduct for non-high-risk AI systems [1].
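The four tiers above can be read as a triage that checks the most restrictive category first. The following Python sketch illustrates that reading only; it is not a legal test. The label strings, the `AISystem` fields and the `classify` function are hypothetical names introduced here, and the sketch ignores the exceptions mentioned above (for example, those for real-time remote biometric identification).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels paraphrasing the lists in this article;
# they are not terms defined by the AIA itself.
PROHIBITED_PRACTICES = {
    "subliminal_manipulation",
    "exploiting_vulnerable_groups",
    "social_scoring",
    "real_time_remote_biometric_id",
}
ANNEX_3_AREAS = {
    "biometric_identification",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "justice_democratic_processes",
}
TRANSPARENCY_TRIGGERS = {
    "interacts_with_people",
    "emotion_recognition",
    "biometric_categorisation",
    "generates_or_manipulates_content",
}


@dataclass
class AISystem:
    name: str
    practices: frozenset = frozenset()   # what the system does
    annex_3_area: Optional[str] = None   # deployment area, if any
    safety_component: bool = False       # Annex 2 product or safety component
    features: frozenset = frozenset()    # transparency-relevant features


def classify(system: AISystem) -> RiskTier:
    """Return the most restrictive tier that matches (simplified)."""
    if system.practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if system.safety_component or system.annex_3_area in ANNEX_3_AREAS:
        return RiskTier.HIGH
    if system.features & TRANSPARENCY_TRIGGERS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

For instance, `classify(AISystem("chatbot", features=frozenset({"interacts_with_people"})))` returns `RiskTier.LIMITED`, while a system with no matching attribute falls through to `RiskTier.MINIMAL`. Returning a single tier is a simplification made for this sketch.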

Scope of Application

The EU AI Act will apply to:

- Providers of AI systems that place AI systems on the EU market or put them into service in the EU, whether or not they are established within the Union,

- Users of AI systems located in the EU,

- Providers or users in third countries if outputs of AI systems are used in the EU [1].

Exemptions:

- AI systems developed or used exclusively for military purposes,

- Public authorities in third countries and international organisations using AI systems in the framework of international agreements for law enforcement and judicial cooperation [1].
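The scope rules and exemptions listed above can be summarised as a small decision function. This is a minimal sketch under simplifying assumptions: the `Actor` fields and the `in_scope` function are hypothetical names introduced for illustration, and the real provisions contain conditions that are not modelled here.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    role: str                          # "provider" or "user"
    located_in_eu: bool
    places_on_eu_market: bool = False  # places on the market or puts into service in the EU
    output_used_in_eu: bool = False    # output of the AI system is used in the EU
    exclusively_military: bool = False
    third_country_authority_under_agreement: bool = False


def in_scope(actor: Actor) -> bool:
    """Simplified reading of the scope and exemption lists above."""
    # Exemptions are checked first.
    if actor.exclusively_military or actor.third_country_authority_under_agreement:
        return False
    # Providers are covered wherever they are established.
    if actor.role == "provider" and actor.places_on_eu_market:
        return True
    # Users located in the EU are covered.
    if actor.role == "user" and actor.located_in_eu:
        return True
    # Providers or users in third countries are covered if the output is used in the EU.
    return (not actor.located_in_eu) and actor.output_used_in_eu
```

Under this reading, a provider established outside the Union that places a system on the EU market is in scope, while a system used exclusively for military purposes is not.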

Written by: Gamze Büşra Kaya

Reference

[1] Artificial Intelligence Act. (21 April 2021). “Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts.” EUR-Lex, 52021PC0206. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELLAR:e0649735-a372-11eb-9585-01aa75ed71a1