What does AI Ethics say about the Potential of AI for Sustainable Development Goals?

I: Negative Impacts

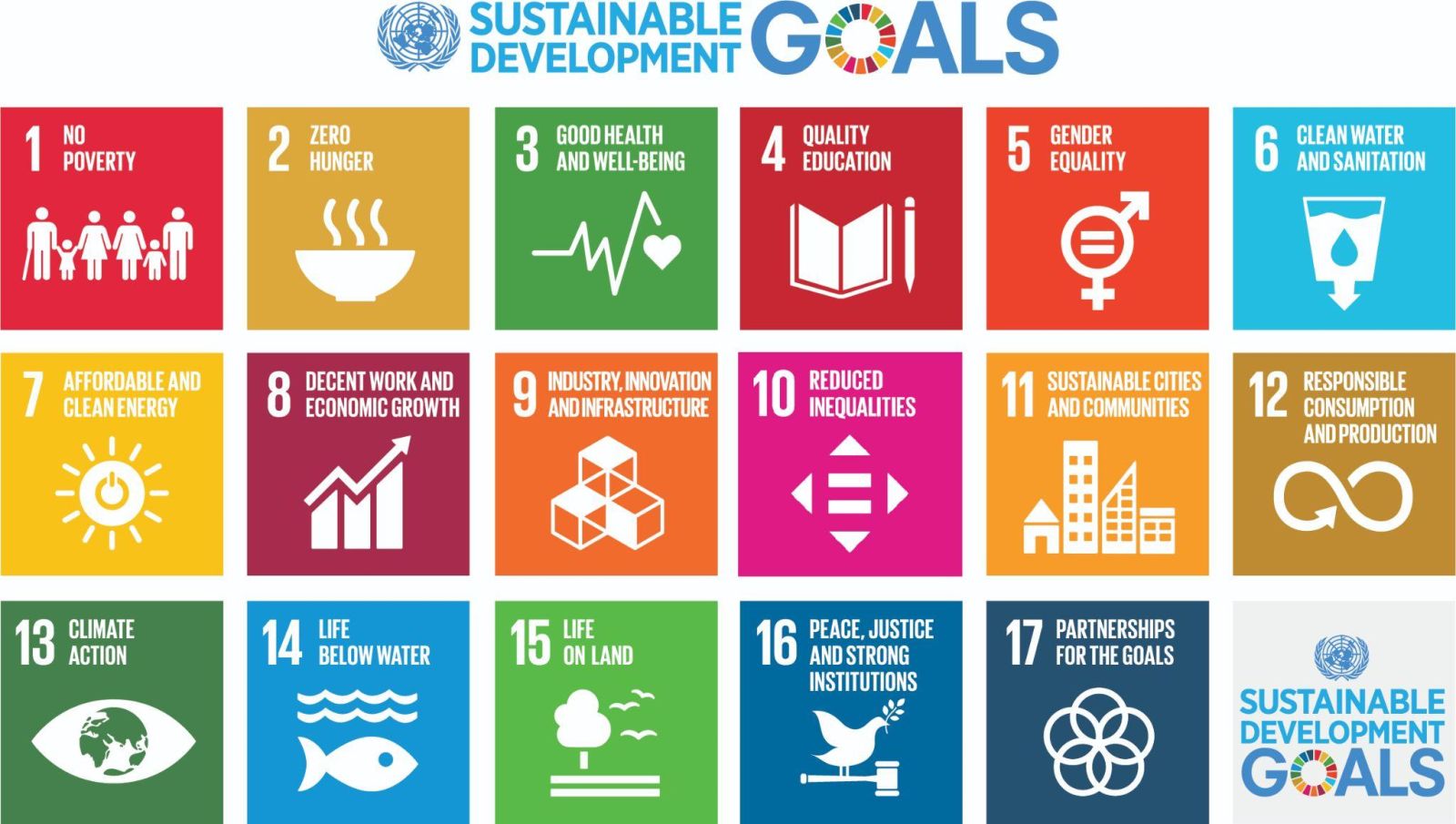

The Sustainable Development Goals (SDGs), also known as the Global Goals, are a set of 17 interconnected goals established by the United Nations in 2015 [1]. They provide a comprehensive framework for addressing a wide range of global challenges and promoting sustainable development across economic, social, and environmental dimensions. The SDGs were designed to be achieved by 2030 and aim to create a better world for all people, while also safeguarding the planet.

Nevertheless, the UN Secretary-General's report published in May 2023 shows that most of the SDGs are in danger [2]. If we continue on the current path, it will be impossible to fulfill the SDGs by 2030. This raises the question of whether artificial intelligence (AI), which AI Ethics emphasizes should be beneficial to humans, can be part of the solution. However, AI appears to have the potential both to advance and to undermine the implementation of the SDGs. So our first question should be: can AI genuinely contribute to achieving the SDGs, or does it make goals that are already far from realization even harder to attain?

How can AI negatively impact the achievement of the SDGs?

Environmental impacts

At present, AI, and generative AI in particular, seems to do more harm than good with respect to the global water challenge, climate change, and energy consumption. For instance, AI has a huge water footprint. The data centers in which AI models are trained and deployed consume enormous amounts of water for cooling and electricity generation. Training GPT-3 in Microsoft's US data centers is estimated to have consumed 700,000 liters of clean freshwater on site; had it been trained in Microsoft's Asian data centers, the figure would have been 2,100,000 liters [3]. When the estimated off-site water footprint of 2.8 million liters for electricity generation is added, the total water footprint for training becomes 3.5 million liters in the US, or 4.9 million liters in Asia [3]. Moreover, depending on when and where it is deployed, ChatGPT needs to “drink” half a liter of water for every 20-50 questions and answers [3]. Researchers anticipate that these figures are likely to rise substantially for GPT-4.
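The totals above are simple sums, and the per-question figure follows from dividing half a liter by the number of questions. The short Python sketch below reproduces that arithmetic from the numbers cited from [3]; the function and variable names are illustrative assumptions, not part of the original study.

```python
# On-site water consumed while training GPT-3, in liters, as cited from [3].
ONSITE_LITERS = {"US": 700_000, "Asia": 2_100_000}

# Off-site water used for electricity generation, in liters, as cited from [3].
OFFSITE_LITERS = 2_800_000


def total_training_water(region: str) -> int:
    """Total training water footprint: on-site plus off-site, in liters."""
    return ONSITE_LITERS[region] + OFFSITE_LITERS


def water_per_question_ml(questions: int, bottle_ml: float = 500.0) -> float:
    """Milliliters per question if `bottle_ml` covers `questions` exchanges."""
    return bottle_ml / questions


for region in ONSITE_LITERS:
    millions = total_training_water(region) / 1_000_000
    print(f"{region}: {millions:.1f} million liters")

# The 20-50 question range from the text gives a per-question range.
low = water_per_question_ml(50)
high = water_per_question_ml(20)
print(f"Per question: {low:.0f} to {high:.0f} mL")
```

Under these figures, a single question and answer corresponds to roughly 10 to 25 milliliters of water.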

Furthermore, it is estimated that training GPT-3 consumed 1,287 megawatt hours of energy and produced 552.1 tons of carbon dioxide equivalent [4], “the equivalent of 123 gasoline-powered passenger vehicles driven for one year” [5]. These numbers cover only the process before any consumer begins using the model, and they are likely to be higher for GPT-4. In the absence of sustainable AI practices, AI is predicted to consume more energy than the collective human workforce by 2025, thereby offsetting carbon zero gains [6].

Can the negative environmental impacts be mitigated?

Although the challenges appear severe and substantial, solutions may exist. One of the most crucial first steps in addressing the problems is ensuring awareness and transparency. Mitigating these adverse effects through technical advances, which can themselves be improved further, becomes possible by promoting awareness of environmentally friendly and sustainable AI and by ensuring transparency about the current environmental impacts of AI development and use.

Reducing the carbon footprint of training these models is possible. For instance, BLOOM, a 176-billion-parameter language model, consumed 433 megawatt hours of energy and produced 30 tons of carbon dioxide equivalent [7]. This is promising because BLOOM is similar in size to GPT-3, which has 175 billion parameters. Research also shows that the carbon footprint can be reduced by a factor of 100 to 1,000 depending on the choice of deep neural network (DNN), data center, and processor [4].
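To see how far apart the two training runs are, one can compare tons of CO2 equivalent per megawatt hour. The sketch below is a rough comparison only, assuming the GPT-3 figures reported in [4] (1,287 MWh and 552.1 tons) and the BLOOM figures cited above (433 MWh and 30 tons); the helper name is hypothetical.

```python
def carbon_intensity(tons_co2e: float, energy_mwh: float) -> float:
    """Tons of CO2 equivalent emitted per megawatt hour of training energy."""
    return tons_co2e / energy_mwh


# Figures as cited in the article: GPT-3 from [4], BLOOM from [7].
gpt3_energy_mwh, gpt3_tons = 1287.0, 552.1
bloom_energy_mwh, bloom_tons = 433.0, 30.0

gpt3_intensity = carbon_intensity(gpt3_tons, gpt3_energy_mwh)
bloom_intensity = carbon_intensity(bloom_tons, bloom_energy_mwh)

energy_ratio = gpt3_energy_mwh / bloom_energy_mwh
emission_ratio = gpt3_tons / bloom_tons

print(f"GPT-3: {gpt3_intensity:.3f} tCO2e/MWh")
print(f"BLOOM: {bloom_intensity:.3f} tCO2e/MWh")
print(f"GPT-3 used {energy_ratio:.1f}x the energy of BLOOM")
print(f"GPT-3 emitted {emission_ratio:.1f}x the CO2e of BLOOM")
```

The gap in emissions is much larger than the gap in energy, which suggests that the carbon intensity of the electricity supply, and not only the amount of energy used, drives the difference.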

Although there is less awareness of water footprint than of carbon footprint, initial research seems promising. Water Usage Effectiveness (WUE), a measure of water efficiency, varies across locations and over time, which indicates that careful decisions about when and where to train a large AI model can significantly reduce its water use [3].
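The idea of choosing a time and place by water efficiency can be sketched as picking the candidate with the lowest WUE. The example below is a minimal illustration, assuming WUE is expressed in liters of water per kilowatt hour; the site names, hours, and WUE values are hypothetical placeholders, not measurements from [3].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    """A candidate place and time for a training run (all values hypothetical)."""
    site: str
    start_hour: int
    wue_l_per_kwh: float  # liters of water per kWh of energy


def onsite_water_liters(energy_kwh: float, wue_l_per_kwh: float) -> float:
    """On-site water use is energy multiplied by water usage effectiveness."""
    return energy_kwh * wue_l_per_kwh


def pick_slot(slots: list[Slot]) -> Slot:
    """Choose the slot with the lowest WUE, i.e. the most water-efficient one."""
    return min(slots, key=lambda s: s.wue_l_per_kwh)


# Placeholder candidates for illustration only.
slots = [
    Slot("site-a", 14, 1.8),
    Slot("site-a", 2, 0.9),
    Slot("site-b", 14, 0.5),
]

best = pick_slot(slots)
job_energy_kwh = 1000.0  # hypothetical job size
water = onsite_water_liters(job_energy_kwh, best.wue_l_per_kwh)
print(f"Run at {best.site}, hour {best.start_hour}: about {water:.0f} liters")
```

A real scheduler would also have to weigh carbon intensity, cost, and latency, which this sketch leaves out.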

If tech companies become more transparent about the water and energy consumed and the carbon emitted during the entire life cycle of AI, the environmental harms can be mitigated. For instance, with access to information about a data center's runtime water efficiency, AI model developers could schedule model training more effectively and make informed decisions about where to deploy their trained models. Likewise, individuals who prioritize water conservation might opt to use AI services during periods of heightened water efficiency [3].

Moreover, without transparency on these matters, it becomes impossible to hold companies accountable and push them toward substantial reductions, and consumers cannot choose AI systems with a smaller negative impact on the climate and environment, or abstain from using such systems entirely [8]. Finally, transparency can support the research community's work on environmentally friendly and sustainable AI and help policymakers regulate the adverse impacts of AI on the environment.

Social and economic impacts

Besides its adverse effects on the environment, AI also seems to have a huge potential to undermine the social and economic SDGs. Social and economic implications of AI such as algorithmic bias and discrimination, the use of deepfakes for harmful ends, disinformation, the automation of certain jobs, and the digital divide threaten SDGs such as “Gender Equality”, “Reduced Inequalities”, “Peace, Justice and Strong Institutions” and “Decent Work and Economic Growth”. To illustrate, algorithmic bias and discrimination can perpetuate and even exacerbate existing inequalities, leading to less just societies and institutions.

Furthermore, the widespread dissemination of deepfakes and disinformation has the potential to substantially undermine trust, given that individuals may struggle to discern whether an image, text, sound, or video is authentic or artificially created. The long-term effect of the growing prevalence of deepfakes and misinformation could be profoundly damaging to trust in institutions and individuals [8].

Finally, the economic implications of AI might include excessive job automation, rising inequality, inefficiently depressed wages, and a failure to enhance productivity [9]. That is, although job loss is the danger most often emphasized, the potential risks extend beyond it to the devaluation of labor and an exacerbation of economic disparities.

Can the negative social and economic impacts be mitigated?

The negative social impacts of AI are probably the most extensively discussed topic within AI Ethics, and algorithmic bias and discrimination in particular have been among the most prominent issues. Proposed regulations and ethical frameworks focus specifically on these issues, while tools such as risk and impact assessments and bias audits aim to address and mitigate them. Effective use of these tools, enforcement of regulations, a growing number of regulations dealing with these issues around the world, and adherence to ethical frameworks during the development and use of AI systems can mitigate these risks.

Furthermore, for the widespread dissemination of deepfakes and disinformation, some technical solutions have been suggested, such as adding watermarks or labels to synthetic content. However, these cannot completely solve the problem. They can be bypassed, for example by capturing a screenshot, by cropping out visual watermarks, or by removing the watermarking step from open-source models [8]. That is, the problem cannot be solved entirely by adding technical layers, particularly when content is intentionally misleading. Alternative approaches, such as robust media literacy and trusted media institutions, together with substantial attention to this issue from media and social science researchers, are therefore important [8].

Finally, the potential benefits of AI, such as freeing people from repetitive tasks and enhancing productivity, are widely discussed, but often without proper attention to how to achieve them. In such a climate, it is easy to overlook the potential economic harms of AI and to unintentionally turn its potential benefits into a disaster. For this reason, regulating AI and steering AI research towards domains in which AI can generate new tasks that enhance human productivity, as well as new products and algorithms that empower workers [9], offers a strategy for averting these harms.

Conclusion

AI poses many risks to the SDGs, but it seems that these risks can be mitigated. Measures include bringing attention to overlooked or less emphasized issues, fostering awareness of them, and directing research communities towards the development and use of ethical AI that addresses the challenges they pose. In addition, technology companies need to strengthen their commitment to ethical principles and values and to provide the necessary information to research communities, policymakers, and the public in a transparent way, and regulations are required to ensure that they do so.

Considering the current state of humanity and the planet, it is obvious that AI must provide more benefits than harms to both people and the planet. In our next article, while keeping this in mind, we will focus on the question of whether AI can contribute to achieving the SDGs.

References

[1] United Nations. (n.d.). Take action for the sustainable development goals - united nations sustainable development. United Nations. https://www.un.org/sustainabledevelopment/sustainable-development-goals/

[2] General Assembly Economic and Social Council advance ... - United nations. (2023, May). https://hlpf.un.org/sites/default/files/2023-04/SDG%20Progress%20Report%20Special%20Edition_0.pdf

[3] Li, P., Yang, J., Islam, M. A., & Ren, S. (2023, April 6). Making AI less “thirsty”: Uncovering and addressing the secret water footprint of AI models. arXiv.org. https://arxiv.org/abs/2304.03271

[4] Patterson, D., Gonzalez, J., Le, Q., Liang, C., Munguia, L.-M., Rothchild, D., So, D., Texier, M., & Dean, J. (2021, April 23). Carbon emissions and large neural network training. arXiv.org. https://arxiv.org/abs/2104.10350

[5] Saenko, K. (2023, May 25). A computer scientist breaks down generative AI’s Hefty Carbon Footprint. Scientific American. https://www.scientificamerican.com/article/a-computer-scientist-breaks-down-generative-ais-hefty-carbon-footprint/

[6] Gartner unveils top predictions for IT organizations and users in 2023 and beyond. Gartner. (n.d.).

[7] Luccioni, A. S., Viguier, S., & Ligozat, A.-L. (2022, November 3). Estimating the carbon footprint of bloom, a 176B parameter language model. arXiv.org. https://arxiv.org/abs/2211.02001

[8] Ghost in the machine. (2023, June). https://storage02.forbrukerradet.no/media/2023/06/generative-ai-rapport-2023.pdf

[9] Acemoglu, D. (2021, September). Harms of AI - National Bureau of Economic Research. https://www.nber.org/system/files/working_papers/w29247/w29247.pdf

Writer: Gamze Büşra Kaya