The Need for Trustworthy Artificial Intelligence

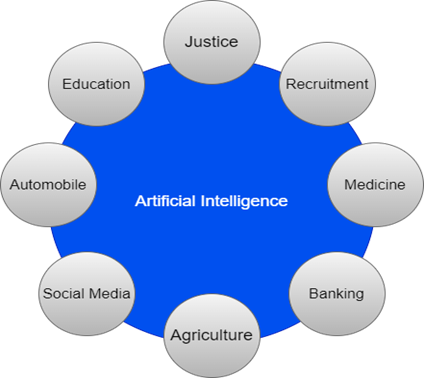

Artificial intelligence (AI) systems are increasingly used in, and are increasingly shaping, many areas of life such as the justice system, recruitment, medicine, and banking. They have the potential to solve many human problems and offer great benefits, and in many areas they are already benefiting people.

Along with these benefits, however, AI also brings new ethical, legal, and social challenges [1], and problems have already arisen from the use of AI systems. For example, the COMPAS algorithm, which is used to estimate the risk of recidivism, is biased against Black defendants; a facial recognition software labeled Black people with inappropriate labels; and an AI recruiting tool was biased against women [2]. These striking examples show that careless use of AI systems threatens values such as justice and equality that shape social life. The ethical, legal, and social problems arising from AI systems can stem from the systems' unreliable behavior and from their lack of interpretability and explainability. Concerns arise because bias can mislead black-box systems and because the complexity of these systems makes it difficult to understand how their decisions are made [2].

These concerns hinder the adoption of AI systems in various areas. For example, although AI-based medical diagnostic support systems can be very useful, health professionals do not widely trust or accept them because they are not interpretable [2]. In other words, using these systems can create difficulties, and systems that could be very useful to people remain underused because they fail to gain people's trust. To take full advantage of AI systems, the ethical, legal, and social challenges they may cause must be overcome, it must be ensured that they do not harm people, and people's trust in them must be built. In this context, different approaches such as beneficial AI, responsible AI, and ethical AI have been put forward, all aiming to advance AI in a way that maximizes its benefits while reducing or preventing its risks [1].

Trustworthy AI is another term coined for a similar purpose. It began to be widely adopted after the European Commission's independent High-Level Expert Group on Artificial Intelligence published the “Ethics Guidelines for Trustworthy AI” [1]. Trustworthy AI is based on the idea that AI's full potential can only be realized if trust in it is established [1]. The concept has gained global acceptance, and Gartner's predictions illustrate how important the need for it is: by 2025, an estimated 30% of all AI-based digital products will need to use a trustworthy AI framework, and 86% of users will trust and remain loyal to companies that apply ethical AI principles [2].

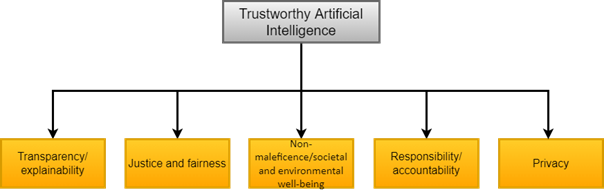

However, there is no consensus on what makes AI trustworthy, and the requirements for trustworthy AI are not prioritized equally worldwide [2]. For this reason, some researchers have examined the principles proposed for trustworthy AI frameworks and identified five that are recommended more often than others: “transparency/explainability, justice and fairness, non-maleficence/societal and environmental well-being, responsibility/accountability, and privacy” [2].

Recommended Principles for the Trustworthy AI Framework

This section examines the definitions of the five most frequently recommended principles in the trustworthy AI framework and explains why each is needed.

First, the principle of fairness requires that AI systems do not discriminate against any person or group in society [2]. AI systems that are not properly designed, developed, and implemented can lead to bias and injustice [2], so they must comply with the principle of fairness to avoid these outcomes.

The principle of explainability requires that decisions made by automated systems and algorithms, the data leading to these decisions, and the information obtained from that data be explained to users and other stakeholders “in non-technical terms and in plain language,” including why, how, where, and for what purpose they are used [3]. For the decisions of AI systems to be trusted, the different stakeholders involved in these systems must understand the reasons behind those decisions [2]. Complying with the principle of explainability is therefore necessary to build trust in AI systems.

The principle of accountability requires responsibility to be distributed among stakeholders [2]. The need for accountability stems from the use of algorithmic decision-making in a variety of high-risk applications, where accountability is required to ensure that these systems are safe and reliable [2].

The principle of privacy ensures that sensitive data shared by an individual or collected by an AI system is protected against unfair or illegal collection and use [2]. Protecting privacy is crucial for gaining users' trust, because data breaches can lead to the misuse of information and data, which can reduce users' trust in the system [2]. The privacy of both the system and its users must therefore be ensured.

Finally, the principle of non-maleficence requires that AI systems be developed and used in a way that does not harm humans [1]. People will not trust AI systems that harm them, so for systems to be trusted, accepted, and widely used, they must be compatible with the principle of non-maleficence.

Writer: Gamze Büşra Kaya

References

[1] S. Thiebes, S. Lins, and A. Sunyaev, “Trustworthy Artificial Intelligence,” Electronic Markets, vol. 31, no. 2, pp. 447–464, 2020.

[2] D. Kaur, S. Uslu, K. J. Rittichier, and A. Durresi, “Trustworthy Artificial Intelligence: A Review,” ACM Computing Surveys, vol. 55, no. 2, pp. 1–38, 2023.

[3] “Ulusal Yapay Zekâ Stratejisi (UYZS) 2021-2025” [National Artificial Intelligence Strategy 2021-2025], cbddo.gov.tr. [Online]. Available: https://cbddo.gov.tr/SharedFolderServer/Genel/File/TR-UlusalYZStratejisi2021-2025.pdf. [Accessed: 8-Sep-2022].